The top 15 technical SEO strategies to boost website rankings:

- Enhance website loading speed

- Implement Secure Sockets Layer (SSL)

- Optimize for mobile devices

- Create an XML sitemap

- Utilize the robots.txt file

- Fix broken links

- Implement structured data markup

- Ensure a flat site architecture

- Canonicalize duplicate content

- Optimize URL structure

- Leverage browser caching

- Minify CSS, JavaScript, and HTML

- Implement breadcrumb navigation

- Set up 301 redirects for moved content

- Monitor and fix crawl errors

Technical SEO plays a massive role in improving your search rankings. If your website isn’t optimized, search engines will have difficulty crawling, indexing, and ranking your pages. This can result in lower visibility and lost traffic.

To stay ahead, optimize site speed, mobile-friendliness, and structured data. A fast-loading, mobile-friendly website with clean architecture helps search engines understand your content better. Fixing broken links, using descriptive URLs, and setting up 301 redirects also improve user experience and search engine results.

A Reddit user says that technical SEO should come first because if a website has major technical issues—like rendering problems—Google may struggle to index or understand its content. No matter how well-optimized the on-page elements are, they won’t matter if search engines can’t access them properly. However, once technical SEO is solid, content becomes the key driver for rankings. A balanced approach is best: strong technical foundations ensure visibility, while high-quality, relevant content keeps users engaged and improves rankings. Ignoring either can limit success, but technical SEO lays the groundwork for everything else.

This blog post shares 15 technical SEO strategies to boost your website rankings. These SEO tips will help you enhance your website’s performance, drive organic traffic, and improve search rankings.

1. Enhance Website Loading Speed

A slow website affects user experience and search rankings. Google considers page speed a ranking factor, making it essential to optimize loading times. Improve site speed by:

- Compressing images with tools like TinyPNG and serving them in modern formats such as WebP

- Using a Content Delivery Network (CDN) to distribute content faster

- Enabling lazy loading for images and videos

- Reducing server response time and optimizing hosting

- Minimizing HTTP requests by combining CSS and JavaScript files

Use Google PageSpeed Insights to measure your Core Web Vitals (Largest Contentful Paint, Interaction to Next Paint, and Cumulative Layout Shift) and find specific speed improvements.
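
PageSpeed Insights grades each Core Web Vital against thresholds Google publishes: Largest Contentful Paint (LCP) is “good” at 2.5 seconds or less, Interaction to Next Paint (INP) at 200 ms or less, and Cumulative Layout Shift (CLS) at 0.1 or less. As a rough sketch, the Python snippet below applies the same cut-offs to values you have measured yourself; the sample numbers and the `rate` helper are illustrative, not part of any Google tool.

```python
# Google's published Core Web Vitals thresholds: (upper bound for "good", upper bound for "needs improvement").
# LCP is in seconds, INP in milliseconds, CLS is unitless.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}

def rate(metric: str, value: float) -> str:
    """Classify a measured value as 'good', 'needs improvement', or 'poor'."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"

# Made-up measurements, e.g. copied from a PageSpeed Insights report.
measured = {"LCP": 3.1, "INP": 180, "CLS": 0.02}
for name, value in measured.items():
    print(f"{name}: {value} -> {rate(name, value)}")
```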

2. Implement Secure Sockets Layer (SSL)

HTTPS is a confirmed, if lightweight, Google ranking signal. A secure website builds trust and protects user data. To implement SSL:

- Install an SSL certificate from a trusted provider

- Redirect all HTTP URLs to HTTPS using 301 redirects

- Check for mixed content issues (HTTPS pages that still load HTTP resources) using your browser’s developer tools or a site crawler

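HTTPS encryption protects data in transit from interception and tampering, and redirecting every page to HTTPS keeps duplicate HTTP versions from competing in the index. (Modern certificates actually use TLS, SSL’s successor, though the SSL name has stuck.)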
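
Mixed content happens when an HTTPS page still loads scripts, stylesheets, or media over plain HTTP. A minimal sketch using Python’s built-in `html.parser` can flag such URLs in a page’s source; the list of tags it checks is a simplified assumption, and the browser’s developer console remains the authoritative check.

```python
from html.parser import HTMLParser

# Tags whose attribute loads a subresource (a simplified, non-exhaustive list).
RESOURCE_ATTRS = {
    "img": "src", "script": "src", "iframe": "src",
    "audio": "src", "video": "src", "source": "src",
}

class MixedContentFinder(HTMLParser):
    """Collects subresource URLs that use plain http:// on a page."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        url = None
        if tag in RESOURCE_ATTRS:
            url = attrs.get(RESOURCE_ATTRS[tag])
        elif tag == "link" and attrs.get("rel") == "stylesheet":
            url = attrs.get("href")
        if url and url.lower().startswith("http://"):
            self.insecure.append(url)

def find_mixed_content(html: str) -> list[str]:
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure

page = """
<link rel="stylesheet" href="http://cdn.example.com/style.css">
<img src="https://www.example.com/logo.png">
<script src="http://www.example.com/app.js"></script>
<a href="http://partner.example.org/">Partner</a>
"""
print(find_mixed_content(page))
```

Ordinary `<a href="http://...">` links are not mixed content, since they only navigate away from the page, so the sketch ignores them.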

3. Optimize for Mobile Devices

Google follows a mobile-first indexing approach, meaning it primarily uses the mobile version of a site for ranking. Ensure a mobile-friendly website by:

- Using a responsive design that adapts to different screen sizes

- Running a Lighthouse audit in Chrome DevTools (Google retired its standalone Mobile-Friendly Test in late 2023)

- Optimizing images and videos for mobile

- Ensuring buttons and text are easy to interact with on touchscreens

A well-optimized mobile experience boosts SEO rankings and user engagement.
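
Responsive designs typically depend on a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`; without it, mobile browsers lay the page out at desktop width and shrink it. A quick Python check for that tag might look like this; the class and function names are illustrative, and it is no substitute for testing on real devices.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Remembers the content attribute of <meta name="viewport">, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""

def has_responsive_viewport(html: str) -> bool:
    """True if the page sets its layout width to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()

print(has_responsive_viewport('<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'))
print(has_responsive_viewport("<head><title>Desktop-only page</title></head>"))
```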

4. Create an XML Sitemap

An XML sitemap helps search engines crawl and index website pages efficiently. To create and submit an XML sitemap:

- Generate one using tools like Yoast SEO or Google XML Sitemaps

- Ensure it includes only canonical, indexable pages, leaving out duplicates, redirects, and noindexed URLs

- Submit it to Google Search Console

Regularly update the sitemap to reflect changes in site structure.
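
Plugins like Yoast SEO generate the sitemap for you, but the format itself is simple: a `<urlset>` element in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace containing one `<url>` entry per page. A minimal Python sketch using the standard library, assuming you already have a list of canonical URLs and last-modified dates (the example pages are made up):

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date as YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

pages = [
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/blog/technical-seo", "2024-05-10"),
]
print(build_sitemap(pages))
```

ElementTree escapes characters such as `&` in URLs automatically, which the sitemap protocol requires.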

5. Utilize the Robots.txt File

The robots.txt file tells search engine crawlers which parts of a site they may crawl. A well-structured robots.txt file helps manage crawl budget. Best practices include:

- Blocking low-value pages (e.g., admin pages, internal search results)

- Allowing search engine bots to access important sections

- Checking the robots.txt report in Google Search Console to make sure essential pages aren’t blocked

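Improper robots.txt settings can stop search engines from crawling important pages. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it, so use a noindex meta tag on a crawlable page to keep it out of search results.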
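
Python’s standard library includes a robots.txt parser, which makes it easy to sanity-check rules before you deploy them. The robots.txt below is a hypothetical example for `www.example.com`:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ["https://www.example.com/blog/technical-seo",
            "https://www.example.com/admin/settings"]:
    verdict = "crawlable" if parser.can_fetch("Googlebot", url) else "blocked"
    print(url, "->", verdict)

print(parser.site_maps())  # sitemap URLs declared in the file
```

One caveat: `urllib.robotparser` applies the first rule that matches, whereas Google applies the most specific (longest) matching rule, so files that mix `Allow` and `Disallow` for overlapping paths can be judged differently. Treat this as a quick check and confirm in Search Console.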

6. Fix Broken Links

Broken links harm SEO rankings and user experience. They lead to crawl errors and waste crawl budget. Identify and fix broken links by:

- Using tools like Google Search Console, Ahrefs, or Screaming Frog

- Redirecting broken URLs to relevant pages with 301 redirects

- Updating internal links to point to existing pages

Regularly audit and fix broken links to maintain a seamless website structure.
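
Crawlers like Screaming Frog do this at scale, but the core idea fits in a few lines: collect every internal link on a page and compare it with the URLs you know are live. In this Python sketch, `live_urls` is an assumed input (for example, the URLs from your sitemap or a crawl export), and the helper names are illustrative.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse

class LinkCollector(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def broken_internal_links(page_url: str, html: str, live_urls: set[str]) -> list[str]:
    """Return internal links on page_url whose targets are not in live_urls."""
    collector = LinkCollector()
    collector.feed(html)
    site = urlparse(page_url).netloc
    broken = []
    for href in collector.hrefs:
        # Resolve relative links and drop #fragments before comparing.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).netloc == site and absolute not in live_urls:
            broken.append(absolute)
    return broken

live = {"https://www.example.com/", "https://www.example.com/services"}
page_html = ('<a href="/services">Services</a> <a href="/old-page#top">Old</a> '
             '<a href="https://other.example.org/">External</a>')
print(broken_internal_links("https://www.example.com/blog/post", page_html, live))
```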

7. Implement Structured Data Markup

Structured data, or schema markup, helps search engines understand content better. It enhances search results with rich snippets, improving click-through rates (CTR). Implement structured data by:

- Using JSON-LD format for easy integration

- Adding markup for FAQs, products, reviews, and events

- Testing with Google’s Rich Results Test

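Properly implemented structured data makes pages eligible for rich results, which can improve a website’s visibility in search engine results pages (SERPs), although Google does not guarantee that rich results will appear.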
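
JSON-LD is a `<script type="application/ld+json">` block containing a schema.org object. As an illustration, this Python sketch builds FAQPage markup from question-and-answer pairs; the helper name is hypothetical, and the output should still be validated with the Rich Results Test. Since 2023, Google shows FAQ rich results only for a limited set of well-known government and health sites, so the same pattern is often more useful for Product or Event markup.

```python
import json

def faq_jsonld(faqs: list[tuple[str, str]]) -> str:
    """Wrap schema.org FAQPage markup for (question, answer) pairs in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    # Escape "</" so text containing "</script>" cannot end the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'

faqs = [
    ("What is technical SEO?",
     "Optimizing a site's infrastructure so search engines can crawl, render, and index it."),
]
print(faq_jsonld(faqs))
```

JSON parsers read the escaped `<\/` as a plain `</`, so the data itself is unchanged.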

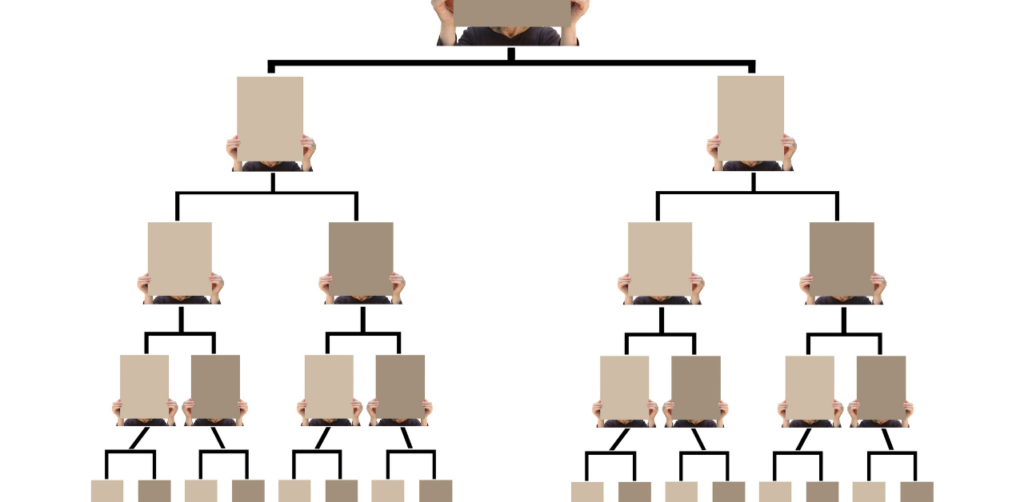

8. Ensure a Flat Site Architecture

A flat site architecture makes web pages accessible within a few clicks from the homepage. This improves crawl efficiency and search rankings. Best practices include:

- Using a simple, logical URL hierarchy

- Linking key pages from the homepage

- Keeping navigation intuitive for users and search engines

A well-structured website enhances user experience and SEO performance.
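
Click depth, the minimum number of clicks from the homepage to a page, is what a flat architecture tries to keep low. Given an internal-link graph (for instance, from a crawl export), a breadth-first search computes it. The site graph and the three-click threshold below are illustrative assumptions, not fixed rules.

```python
from collections import deque

def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: minimum clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal-link graph: page -> pages it links to.
site = {
    "/": ["/services", "/blog"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/old-post"],
}
depths = click_depths(site)
print({page: d for page, d in depths.items() if d > 3})  # pages buried deeper than 3 clicks
```

Pages missing from the result entirely are orphans: no chain of links reaches them from the homepage, which is usually worse than being deep.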

9. Canonicalize Duplicate Content

Duplicate content confuses search engines and affects rankings. To resolve duplicate content issues:

- Use canonical tags to point to the preferred version of a page

- Avoid creating duplicate URLs through parameters (e.g., tracking, sorting, or session IDs)

- Set up 301 redirects for duplicate pages

Canonicalization ensures search engines index the correct pages, preventing ranking dilution.
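
A canonical tag is a `<link rel="canonical" href="...">` element in the page’s `<head>`. One common approach is to derive the canonical URL by stripping parameters that don’t change the content. The Python sketch below assumes a particular list of such parameters (`IGNORED_PARAMS`), which you would adjust for your own site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed NOT to change page content; adjust this set for your own site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                  "utm_content", "gclid", "fbclid", "sessionid", "sort"}

def canonical_url(url: str) -> str:
    """Drop content-neutral parameters and the fragment, lowercase the host, sort the rest."""
    parts = urlsplit(url)
    kept = sorted((key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
                  if key.lower() not in IGNORED_PARAMS)
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/", urlencode(kept), ""))

def canonical_tag(url: str) -> str:
    """Render the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'

print(canonical_tag("https://WWW.Example.com/shoes?utm_source=news&color=red&sort=price#reviews"))
```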

10. Optimize URL Structure

SEO-friendly URLs improve search engine indexing and user experience. Follow these URL optimization best practices:

- Use descriptive URLs with relevant keywords

- Keep URLs short and readable

- Avoid special characters and unnecessary parameters

An optimized URL structure makes pages easier to crawl and rank.
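
Descriptive, short URLs usually start from the page title. This Python sketch shows one way to turn a title into a slug: accents are folded to plain ASCII, words are split on anything that isn’t a letter or digit and joined with hyphens, and a small stop-word list (an assumption; tune it to taste) keeps the result short.

```python
import re
import unicodedata

# Illustrative stop words to drop from slugs; adjust for your content.
STOP_WORDS = {"a", "an", "the", "and", "or", "of", "to", "for", "in", "on", "with"}

def slugify(title: str, max_words: int = 8) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop the non-ASCII accents.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Keep runs of letters/digits; punctuation and spaces act as separators.
    words = [w for w in re.findall(r"[a-z0-9]+", ascii_text.lower()) if w not in STOP_WORDS]
    return "-".join(words[:max_words])

print(slugify("15 Technical SEO Strategies to Boost Website Rankings!"))
```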

11. Leverage Browser Caching

Browser caching improves page speed by storing static files locally on a user’s device. This reduces load times for returning visitors. To enable caching:

- Configure cache settings in the .htaccess file (on Apache servers) or in your hosting panel

- Use Google PageSpeed Insights to check caching issues

- Set cache expiration for images, CSS, and JavaScript files

Faster load times improve user retention and support better SEO rankings.
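
Whatever server you use (Apache’s `.htaccess`, Nginx, or a CDN), browser caching ultimately comes down to the `Cache-Control` response header. This Python sketch maps file types to header values under one assumed policy: a year of caching for static assets and revalidation for HTML. The `immutable` directive is only safe when asset filenames change whenever their content does (for example, `app.3f2a.js`).

```python
import os

ONE_YEAR = 60 * 60 * 24 * 365  # seconds

# Assumed policy: long-lived caching for static assets, revalidation for everything else.
CACHE_POLICY = {
    ".css": f"public, max-age={ONE_YEAR}, immutable",
    ".js": f"public, max-age={ONE_YEAR}, immutable",
    ".woff2": f"public, max-age={ONE_YEAR}, immutable",
    ".png": f"public, max-age={ONE_YEAR}",
    ".jpg": f"public, max-age={ONE_YEAR}",
    ".webp": f"public, max-age={ONE_YEAR}",
}

def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value based on the file extension."""
    ext = os.path.splitext(path)[1].lower()
    # HTML and unknown types: browsers may store them but must revalidate before reuse.
    return CACHE_POLICY.get(ext, "no-cache")

for path in ["/index.html", "/assets/app.3f2a.js", "/img/logo.png"]:
    print(path, "->", cache_control_for(path))
```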

12. Minify CSS, JavaScript, and HTML

Characters that browsers don’t need, such as extra whitespace and comments, add to file size and slow down web pages. Minify CSS, JavaScript, and HTML by:

- Using tools like MinifyCode or Autoptimize

- Removing unnecessary white spaces and comments

- Combining multiple CSS and JavaScript files

A leaner codebase improves website performance and search rankings.
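
To show what a minifier actually removes, here is a deliberately naive Python CSS minifier built from regular expressions. It is a teaching sketch with clear limits: it does not understand quoted strings, `url()` values, or selectors where whitespace is significant, so use a dedicated tool like the ones above in production.

```python
import re

def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and redundant whitespace (teaching sketch only)."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace runs
    css = re.sub(r"\s*([{};:,])\s*", r"\1", css)           # trim spaces around punctuation
    css = css.replace(";}", "}")                           # drop the last semicolon in a block
    return css.strip()

source = """
/* Main heading */
h1 {
    color: #333;
    margin: 0 auto;
}
"""
print(minify_css(source))  # h1{color:#333;margin:0 auto}
```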

13. Implement Breadcrumb Navigation

Breadcrumb navigation helps users and search engines understand site hierarchy. It improves internal linking and SEO rankings. To add breadcrumb navigation:

- Marking up breadcrumbs with BreadcrumbList structured data

- Ensure breadcrumbs match the site structure

- Keep breadcrumb links descriptive and concise

Breadcrumbs improve user navigation and search engine results.
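
Breadcrumb markup uses the schema.org `BreadcrumbList` type, with one `ListItem` per level and a `position` starting at 1. A minimal Python sketch that generates it from a trail of (name, URL) pairs follows; the helper name, page names, and URLs are made up, and the output belongs inside a `<script type="application/ld+json">` tag.

```python
import json

def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build schema.org BreadcrumbList JSON-LD from (name, absolute URL) pairs, home first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)

trail = [
    ("Home", "https://www.example.com/"),
    ("Blog", "https://www.example.com/blog/"),
    ("Technical SEO", "https://www.example.com/blog/technical-seo"),
]
print(breadcrumb_jsonld(trail))
```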

14. Set Up 301 Redirects for Moved Content

A 301 redirect permanently sends users and search engines to the new URL when content is moved. This prevents traffic loss from broken pages and passes ranking signals to the new URL. Best practices include:

- Using Google Search Console to monitor redirect issues

- Redirecting old URLs to the most relevant pages

- Avoiding redirect chains and loops

Proper 301 redirects maintain search rankings and user experience.
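
Redirect chains (A → B → C) and loops (A → B → A) are easy to create as a site evolves. Given your redirect map, a short Python sketch can point every old URL straight at its final destination and stop on loops; the map below is hypothetical.

```python
def resolve_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every redirected URL straight to its final destination.

    Raises ValueError if a redirect loop is found.
    """
    final = {}
    for start in redirects:
        seen = [start]
        target = redirects[start]
        while target in redirects:  # the target itself redirects: a chain
            if target in seen:
                raise ValueError("Redirect loop: " + " -> ".join(seen + [target]))
            seen.append(target)
            target = redirects[target]
        final[start] = target
    return final

# Hypothetical 301 map: old URL -> new URL.
redirects = {
    "/old-services": "/services-2019",
    "/services-2019": "/services",
}
for old, new in resolve_redirects(redirects).items():
    kind = "chain" if redirects[old] != new else "direct"
    print(f"{old} -> {new} ({kind})")
```

Updating each rule to its flattened target turns chains into single hops, so users and crawlers reach the final page through one 301.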

15. Monitor and Fix Crawl Errors

Crawl errors prevent search engines from indexing web pages correctly. Regularly find and fix them by:

- Reviewing the Page indexing report in Google Search Console

- Running Screaming Frog to detect crawl issues

- Updating broken internal and external links

Addressing crawl errors keeps indexing efficient and protects your search engine rankings.
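
Besides Search Console, your server logs show exactly which URLs returned errors to Googlebot. This Python sketch assumes the common Apache/Nginx “combined” log format and counts 4xx and 5xx responses served to user agents containing “Googlebot”; the sample lines are invented. User-agent strings can be spoofed, so verify important findings (Google documents a reverse DNS lookup for this).

```python
import re
from collections import Counter

# Assumed "combined" log format: IP, identity, user, [time], "request", status, size, "referrer", "user agent".
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_errors(lines: list[str]) -> Counter:
    """Count (status, path) pairs for 4xx/5xx responses served to Googlebot user agents."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match["agent"] and match["status"][0] in "45":
            errors[(match["status"], match["path"])] += 1
    return errors

BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{BOT}"',
    f'66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /blog/ HTTP/1.1" 200 9000 "-" "{BOT}"',
    '203.0.113.7 - - [10/May/2024:10:01:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [10/May/2024:10:02:00 +0000] "GET /api/search HTTP/1.1" 500 0 "-" "{BOT}"',
]
print(googlebot_errors(sample).most_common())
```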

What is the Role of an SEO Specialist in Technical SEO?

Technical SEO involves optimizing a website’s infrastructure, code, and performance to help search engines understand and index its pages. An SEO specialist focuses on the following:

- They ensure website speed, mobile optimization, and structured data are correctly implemented.

- They manage robots.txt, XML sitemaps, and canonical tags so search engines can crawl and index content properly.

- They conduct site audits to identify and fix broken links, duplicate content, and indexing issues.

- They improve page load time using CDNs, caching strategies, and image optimization techniques.

- They use tools like Lighthouse to ensure a site works well on mobile devices.

- They implement schema markup to generate rich snippets and improve visibility in search engine results.

- They implement HTTPS with SSL/TLS certificates and resolve mixed content issues to enhance website security.

What are the Main Differences Between Technical SEO and On-page SEO?

Technical SEO deals with the backend elements that help search engines understand and index a website. It ensures a site is structured for search engine crawlers and provides a smooth user experience. On the other hand, on-page SEO focuses on optimizing content and elements visible on web pages to improve search engine rankings and user engagement. It involves using relevant keywords, meta descriptions, and internal links.

Strengthen Your Website with Expert Technical SEO

Technical SEO is the backbone of a well-optimized website. Even the best content can struggle to gain visibility without a solid technical foundation. Web Search Marketing understands the dos and don’ts of technical SEO. Our SEO specialists know how to fine-tune your site for better rankings and long-term success. Book a consultation today and take the next step toward higher search visibility.